As large language models (LLMs) become increasingly prevalent in business applications, the need for robust security measures has never been greater. An improperly secured LLM can expose an organization to data breaches, model manipulation, and even regulatory compliance failures. This is where engaging an external security company becomes crucial.

In this blog post, we explore the key considerations for companies looking to hire a security team to assess and secure their LLM-powered systems, as well as the specific tasks that team should undertake at each stage of the LLM development lifecycle.

Stage 0: Hosting Model (Physical vs Cloud)

The choice of hosting model, physical (on-premises) or cloud-based, has significant implications for the security of an LLM. Each approach comes with its own set of security considerations that must be carefully evaluated.

Physical (On-Premises) Hosting Security Risks:

- Physical & Data Center Security – Hosting an LLM on physical infrastructure introduces risks such as unauthorized facility access, hardware theft, and disruption of critical systems. These threats stem not only from technical vulnerabilities but also from human factors such as social engineering and tailgating. To mitigate these risks, organizations should commission a physical penetration test that simulates real-world intrusion attempts, such as impersonating personnel or slipping past staff, and evaluates the resilience of physical barriers, surveillance systems, access controls, and environmental safeguards (e.g., power and fire protection).

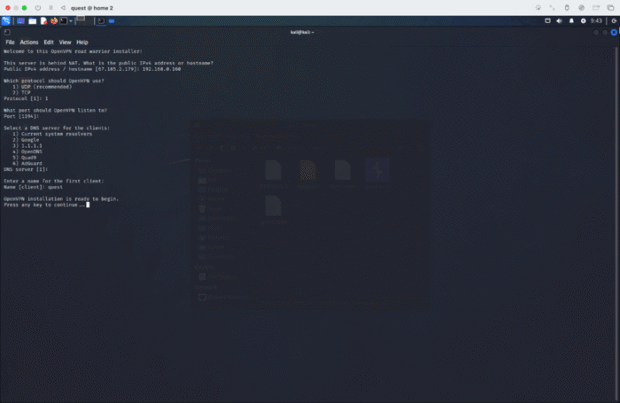

- Network Security – On-prem LLM deployments are particularly vulnerable to external cyberattacks and insider threats if the underlying network architecture is not well-secured. Misconfigured firewalls, exposed services, or overly permissive access controls can open the door to exploitation. To proactively address these risks, a thorough network architecture review and security assessment should be conducted. This includes evaluating segmentation, firewall rules, VPN access, intrusion detection capabilities, and access controls to ensure the LLM environment is protected from both external and internal compromise.

- Supply Chain Risks – On-premises LLM deployments rely heavily on hardware components, which can introduce risk if sourced from untrusted or unverified vendors. Compromised chips, malicious firmware, or tampered devices could undermine the integrity of the entire system. To reduce this risk, a security team should perform a comprehensive supply chain review: assessing procurement practices, verifying vendor trustworthiness, physically inspecting hardware components, and validating firmware and software integrity. The goal is to ensure the infrastructure is free from embedded threats and adheres to secure sourcing principles.
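To make the network review concrete, here is a minimal Python sketch of one check such a review might automate: flagging inbound rules that expose sensitive ports to sources outside an approved management range. The `FirewallRule` type, the port list, and the `10.20.0.0/16` range are hypothetical assumptions, not the export format of any particular firewall.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical policy: these ports (SSH, RDP, and an assumed model-serving
# port) may only be reachable from the approved management range below.
SENSITIVE_PORTS = {22, 3389, 8000}
APPROVED_SOURCES = [ipaddress.ip_network("10.20.0.0/16")]


@dataclass
class FirewallRule:
    """Simplified, hypothetical inbound allow rule."""
    name: str
    source_cidr: str
    port: int


def is_approved(source_cidr: str) -> bool:
    """True if the source network lies entirely inside an approved range."""
    network = ipaddress.ip_network(source_cidr, strict=False)
    return any(
        network.version == approved.version and network.subnet_of(approved)
        for approved in APPROVED_SOURCES
    )


def find_risky_rules(rules: list[FirewallRule]) -> list[str]:
    """Names of rules that expose a sensitive port to an unapproved source."""
    return [
        rule.name
        for rule in rules
        if rule.port in SENSITIVE_PORTS and not is_approved(rule.source_cidr)
    ]


rules = [
    FirewallRule("ssh-from-anywhere", "0.0.0.0/0", 22),
    FirewallRule("ssh-from-mgmt", "10.20.5.0/24", 22),
    FirewallRule("model-api-from-corp", "10.0.0.0/8", 8000),
    FirewallRule("https-public", "0.0.0.0/0", 443),
]
print(find_risky_rules(rules))  # ['ssh-from-anywhere', 'model-api-from-corp']
```

Checking that the source is contained in an approved range, rather than only searching for `0.0.0.0/0`, also catches rules that are merely too broad, such as an entire `/8`.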

Cloud-Based Hosting Security Risks:

- Cloud Misconfigurations – When an LLM is hosted in the cloud, its security posture depends heavily on how cloud services are configured. Unlike physical environments, where organizations have full control over the hardware and network layers, cloud environments introduce abstraction, and with it a different set of risks. Misconfigured resources such as open S3 buckets, overly permissive IAM roles, or unprotected API endpoints can expose sensitive training data, model artifacts, or credentials. To address this, security teams should conduct a comprehensive cloud configuration review, auditing storage permissions, identity and access management policies, network security settings, and exposed endpoints to identify and remediate misconfigurations that could lead to unauthorized access or data leakage.

- Shared Responsibility Misalignment – One of the most important, and most often overlooked, aspects of cloud security is understanding the shared responsibility model. In contrast to physical hosting, where the organization owns the full security stack, cloud providers secure the underlying infrastructure while customers are responsible for protecting their data, applications, and configurations. Misunderstanding this division can leave assets unprotected or create blind spots in security coverage. Security teams can help by clearly mapping out responsibilities, developing the right set of security controls, and implementing processes that ensure all components of the LLM environment, especially customer-managed ones, are properly secured.

- Insider Threats – Misuse of privileged access by cloud provider employees is rare, but it remains a risk. To address it, organizations should enforce strong encryption and role-based access controls and implement detailed monitoring and audit logging. Security teams can assist by validating that sensitive data is encrypted at rest and in transit, and that any access from the provider's side is transparent and logged.

- Regulatory Compliance – Hosting LLMs in the cloud may introduce additional regulatory requirements depending on industry and geography (e.g., GDPR, HIPAA). Security teams should assess regulatory obligations and design a compliance framework that includes data residency controls, encryption policies, access auditing, and breach response procedures. This helps ensure the LLM environment aligns with legal requirements and industry best practices.
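As one example of what a cloud configuration review can automate, the sketch below scans an AWS-style IAM or bucket policy document for wildcard actions, wildcard resources, and public principals. It is a simplified heuristic that assumes policies in the standard JSON shape (`Statement`, `Effect`, `Action`, `Resource`, `Principal`); it ignores `Condition`, `NotAction`, and explicit denies, so its findings are candidates for manual review rather than a verdict. The bucket and role names are hypothetical.

```python
import json


def as_list(value):
    """IAM policy fields may hold a single value or a list of values."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def audit_policy(policy: dict) -> list[str]:
    """Flag overly permissive Allow statements (simplified heuristic).

    Ignores Condition blocks, NotAction/NotResource, and Deny statements,
    so findings are candidates for manual review, not a final verdict.
    """
    findings = []
    for i, stmt in enumerate(as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue
        actions = as_list(stmt.get("Action"))
        if any(a == "*" or a.endswith(":*") for a in actions):
            findings.append(f"statement {i}: wildcard action {actions}")
        if "*" in as_list(stmt.get("Resource")):
            findings.append(f"statement {i}: applies to all resources")
        principal = stmt.get("Principal")
        if principal == "*" or (
            isinstance(principal, dict) and "*" in as_list(principal.get("AWS"))
        ):
            findings.append(f"statement {i}: public principal")
    return findings


# Hypothetical bucket policy for a training-data bucket.
bucket_policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow", "Principal": "*",
     "Action": "s3:GetObject",
     "Resource": "arn:aws:s3:::example-training-data/*"},
    {"Effect": "Allow",
     "Principal": {"AWS": "arn:aws:iam::111122223333:role/example-trainer"},
     "Action": "s3:*",
     "Resource": "arn:aws:s3:::example-training-data/*"}
  ]
}
""")
for finding in audit_policy(bucket_policy):
    print(finding)
# statement 0: public principal
# statement 1: wildcard action ['s3:*']
```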

When evaluating the hosting model for an LLM, organizations should carefully assess the security risks and controls associated with both physical and cloud-based approaches. A thorough understanding of the security implications, together with the organization's specific requirements and constraints, is crucial in determining the most appropriate hosting solution. The following stages apply regardless of whether a physical or cloud hosting model is used.

Stage 1: Data Curation

The quality of a large language model is directly tied to the quality of the data on which it is trained. While data curation is typically a data science and engineering effort, security risks can still emerge. From a security standpoint, this is a critical point to review the trustworthiness of data sources and implement controls that safeguard the model against poisoning, privacy violations, and biased outputs.

- Data Poisoning Risks – Training on unverified or adversarial data can introduce hidden vulnerabilities, biases, or even malicious behavior into an LLM. This is especially concerning when using public internet sources or community-contributed datasets. Security teams should conduct an architecture review and/or threat model to assess data ingestion workflows. These reviews help identify weak points where malicious actors could insert poisoned data and ensure the organization is applying controls such as data validation, domain allowlisting, and monitoring for anomalous content.

- Privacy and Compliance Violations – Public datasets often include user-generated content that may contain personally identifiable information (PII) or other sensitive data, which could lead to regulatory non-compliance or privacy breaches. Security professionals should work closely with data teams to review the pipeline’s privacy redaction and filtering mechanisms. This involves verifying that rule-based and classifier-based tools are in place to detect and remove PII and that these processes are regularly audited for effectiveness.

- Embedding-Level Poisoning – Even after standard cleaning, adversarial inputs can persist into the embedding layers, introducing subtle shifts in how the model interprets certain content. While hard to detect, this emerging risk can be mitigated through research-driven adversarial evaluation or coordination with red teams focused on LLM embedding behavior. For high-risk models, security teams should stay engaged in discussions about tokenizer design and embedding robustness.
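The ingestion controls described above (source allowlisting and rule-based PII redaction) can be sketched in Python as follows. The allowlisted domains, the record format, and the regex patterns are illustrative assumptions; the phone pattern only covers US-style numbers, and real pipelines typically pair rules like these with classifier-based PII detection.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of vetted source domains.
ALLOWED_DOMAINS = {"example.org", "docs.example.com"}

# Simple rule-based PII patterns (emails and US-style phone numbers only).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b"),
}


def is_allowed_source(url: str) -> bool:
    """Accept a URL only if its host is an allowlisted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS)


def redact_pii(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def ingest(records: list[dict]) -> list[dict]:
    """Drop records from unvetted sources and redact PII in the rest."""
    kept = []
    for record in records:
        if not is_allowed_source(record["url"]):
            continue  # a real pipeline would log the rejection for review
        kept.append({**record, "text": redact_pii(record["text"])})
    return kept


records = [
    {"url": "https://example.org/post/1",
     "text": "Contact jane.doe@mail.com or 555-123-4567."},
    {"url": "https://example.org.evil.net/x", "text": "poisoned sample"},
]
print(ingest(records))
# [{'url': 'https://example.org/post/1', 'text': 'Contact [EMAIL] or [PHONE].'}]
```

Matching on the parsed hostname, rather than checking whether the URL string merely contains an allowed domain, is what stops look-alike hosts such as `example.org.evil.net` from slipping through.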

Stage 2: Model Architecture

At this stage, the organization selects and/or designs the neural architecture that defines how the LLM learns and generates output. Most modern LLMs are built on transformer architectures. While these architectural decisions are primarily technical, they carry significant security implications. If left unchecked, vulnerabilities introduced at the model layer, especially in open-source models, can become persistent threats that are hard to remove. Although this stage does not traditionally involve security professionals, incorporating them early enables preemptive defense against deeply embedded risks.

- Backdoored Model Components – If an attacker gains access during training or modifies an open-source model, they could introduce malicious behavior directly into the model’s architecture. For example, a modified decoder layer in a transformer-based model might be designed to output specific backdoored content when triggered by certain prompts. To mitigate this, security teams should conduct an architecture review focused on model integrity. This includes validating training provenance, reviewing critical model layers for unauthorized changes, and checking for suspicious behaviors in controlled test prompts.

- Model Integrity & Trust in Open Source – Many organizations leverage open-source models to speed up development. However, these models can come with trust issues if their training data, architecture, or modification history isn’t transparent. Security teams should implement model vetting and auditing workflows to verify the source, training lineage, and any pre-applied modifications before the model is deployed or further fine-tuned.

- Security-Enhancing Architecture Choices – While most teams focus on accuracy or efficiency, some architectural choices can also enhance security. For instance, integrating anomaly detection modules or designing the model to support adversarial training can improve resilience to future attacks. Security professionals should recommend security-focused architectural components during early design conversations, especially for high-risk or safety-critical deployments.
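One building block for the model vetting workflow above is verifying downloaded weights and configuration files against a trusted manifest of SHA-256 digests. The sketch below assumes such a manifest exists and was obtained through a trusted channel (for example, published and signed by the model provider); the file names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so multi-gigabyte weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(model_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Compare the files in model_dir with a trusted {relative_path: sha256} manifest."""
    problems = []
    present = {
        p.relative_to(model_dir).as_posix() for p in model_dir.rglob("*") if p.is_file()
    }
    for name, expected in sorted(manifest.items()):
        if name not in present:
            problems.append(f"missing: {name}")
        elif sha256_of(model_dir / name) != expected:
            problems.append(f"hash mismatch: {name}")
    # Files nobody vouched for (e.g. injected code) are also suspicious.
    for name in sorted(present - set(manifest)):
        problems.append(f"unexpected file: {name}")
    return problems


# Demo with a throwaway directory and hypothetical file names.
with tempfile.TemporaryDirectory() as tmp:
    model_dir = Path(tmp)
    weights = model_dir / "model.safetensors"
    weights.write_bytes(b"weights-v1")
    manifest = {"model.safetensors": hashlib.sha256(b"weights-v1").hexdigest()}
    clean_result = verify_artifacts(model_dir, manifest)

    weights.write_bytes(b"weights-tampered")  # simulate modification
    (model_dir / "loader.py").write_text("print('hi')\n")
    tampered_result = verify_artifacts(model_dir, manifest)

print(clean_result)     # []
print(tampered_result)  # ['hash mismatch: model.safetensors', 'unexpected file: loader.py']
```

A hash check only proves that the files match what the manifest's author produced; it cannot reveal a backdoor that was present before the manifest was created, which is why it complements, rather than replaces, behavioral testing with controlled prompts.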

Stage 3: Training at Scale

If the security team performed thorough architecture reviews and threat models earlier, there is relatively little for it to do while the LLM is being trained at scale. Its focus should be on ensuring that the training process itself is secure and stable:

- Reviewing the security of the training infrastructure – Evaluating the physical, network, and access controls in place to protect the training environment from unauthorized access or disruption.

Stage 4: Evaluation

During the evaluation phase, the LLM's performance is assessed using benchmark datasets that measure its knowledge, reasoning, and accuracy across diverse tasks. Common benchmarks include HellaSwag (commonsense reasoning), MMLU (knowledge across a broad range of academic and professional subjects), and TruthfulQA (truthfulness and resistance to common misconceptions). While these tools are useful for gauging general performance, they also introduce a distinct attack surface. At this stage, security professionals focus primarily on ensuring that evaluation practices are trustworthy, free of contamination, and not subject to manipulation.

- Evaluation Contamination – Attackers may attempt to game the evaluation process by inserting benchmark questions (e.g., from HellaSwag or MMLU) into the model's pre-training corpus. If undetected, this can significantly inflate benchmark scores and give a false sense of model quality. Security teams should work with ML engineers to implement benchmark contamination detection, verifying that no evaluation data was leaked into or memorized during training.

- Trust in Evaluators and Benchmarks – Not all benchmarks are created equal, and some may lack rigor or transparency. Relying on poorly constructed or overly narrow benchmarks can result in misleading performance metrics. Security professionals should help vet the evaluation tools and datasets, ensuring they come from reputable sources, are well-maintained, and align with the organization’s goals and risk tolerance.

- Assessment Process Integrity – Evaluation scripts, datasets, and scoring logic can be tampered with if not properly secured. Security teams should review the evaluation infrastructure, ensuring that access is restricted, version control is in place, and results are reproducible and auditable to maintain trust in the outcome.
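A common heuristic for the contamination detection described above is n-gram overlap: flag any benchmark item that shares a long word sequence with the training corpus. The minimal sketch below assumes both fit in memory and uses a default of 13-word n-grams; that value is an assumption, and production-scale checks usually rely on hashed or probabilistic indexes rather than a Python set. Exact matching also misses paraphrased leaks.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lowercase, keep alphanumeric tokens, and return the set of word n-grams."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def find_contaminated(benchmark_items: list[str], corpus_docs: list[str], n: int = 13) -> list[int]:
    """Indices of benchmark items sharing at least one word n-gram with the corpus.

    Items shorter than n words can never match, so n must suit the benchmark.
    """
    index: set[tuple[str, ...]] = set()
    for doc in corpus_docs:
        index |= ngrams(doc, n)
    return [i for i, item in enumerate(benchmark_items) if ngrams(item, n) & index]


corpus = [
    "Scraped forum page. The quick brown fox jumps over the lazy dog near the "
    "river bank today. More unrelated text follows."
]
benchmark = [
    "The quick brown fox jumps over the lazy dog near the river",
    "Which adaptations help desert plants reduce water loss during photosynthesis?",
]
print(find_contaminated(benchmark, corpus, n=8))  # [0]
```

Note that with the default `n=13`, both example items are too short to produce any n-gram, so the choice of `n` has to be tuned to the typical length of the benchmark's items.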

Stage 5: Post-Improvements

As the LLM matures beyond its base training, teams often enhance it with fine-tuning, Retrieval-Augmented Generation (RAG), and prompt engineering to improve performance, introduce domain expertise, or integrate real-time knowledge. While these improvements unlock powerful capabilities, they also create new security concerns—particularly when sensitive data or policy enforcement is involved. At this stage, the security team’s role is to evaluate how these techniques are implemented and ensure that access control, response handling, and ethical safeguards are not compromised in the process.

- Fine-Tuning Risks – Fine-tuning a model on sensitive or proprietary data without proper guardrails can unintentionally expose confidential information to end users. For example, fine-tuning a model on internal government reports or customer records without chat-level access control may result in unauthorized disclosures. Security teams should review fine-tuning datasets and ensure access-controlled boundaries are enforced on model outputs, especially when models are deployed in multi-user environments.

- RAG Integration Misconfigurations – RAG extends an LLM's knowledge by retrieving context from a custom knowledge base, but if access controls are not enforced at the retrieval layer, sensitive data can leak. For example, one user might be able to access another user's financial data if the RAG system retrieves documents indiscriminately. Security teams should assess the RAG implementation for access control enforcement, document scoping, and auditability of retrieved content.

- RAI (Responsible AI) Findings Post-Fine-Tuning – While fine-tuning can strengthen model safety, it can also make it harder to detect unwanted behaviors or policy violations, especially if the model has learned to subtly avoid detection. Security professionals should collaborate with RAI teams to test for adversarial prompt bypasses and edge-case safety violations, even on models that have been fine-tuned for alignment.

- Prompt Engineering Surface Area – Poorly designed prompts or system instructions may lead to unintended model behavior or create prompt injection vulnerabilities, especially when models are integrated into applications. While prompt engineering is generally seen as a low-risk technique, security teams should review system prompts and application-level prompt construction to ensure they are resilient against adversarial inputs and do not override critical safety behaviors.
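For the RAG risk above, the key control is to apply authorization before ranking, so documents a user may not see never enter the candidate set. The sketch below illustrates this with a toy keyword score standing in for embedding similarity; the `Document` and `User` types and the group-based ACL model are hypothetical. In a vector database, the same idea is typically expressed as a metadata filter on the similarity query.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical ACL: groups allowed to read this doc


@dataclass(frozen=True)
class User:
    user_id: str
    groups: frozenset[str]


def score(query: str, doc: Document) -> int:
    """Toy relevance score standing in for embedding similarity: shared words."""
    return len(set(query.lower().split()) & set(doc.text.lower().split()))


def retrieve(query: str, user: User, corpus: list[Document], k: int = 3) -> list[Document]:
    """Apply the ACL filter *before* ranking so unauthorized documents never become candidates."""
    visible = [d for d in corpus if d.allowed_groups & user.groups]
    ranked = sorted(visible, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


corpus = [
    Document("acct-alice", "alice account balance statement", frozenset({"user:alice"})),
    Document("acct-bob", "bob account balance statement", frozenset({"user:bob"})),
    Document("faq", "how to read your account balance", frozenset({"public"})),
]
alice = User("alice", frozenset({"user:alice", "public"}))

# Even a query naming Bob cannot surface Bob's document for Alice.
print([d.doc_id for d in retrieve("show bob account balance", alice, corpus)])
# ['acct-alice', 'faq']
```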

Stage 6: API Integration

At this point in the lifecycle, the LLM transitions from a passive chatbot to an active system component that can interact with real-world applications and services via APIs. Whether it’s resetting passwords, retrieving internal data, or processing user requests, API integration introduces significant new power—and corresponding risk. From a security perspective, this is the stage where traditional API security principles converge with LLM-specific concerns like prompt injection, indirect execution, and intent manipulation. It becomes one of the most critical stages for offensive testing, especially as LLM-driven applications begin making decisions and acting on behalf of users.

- Authentication and Authorization Issues – As LLMs begin triggering API calls, it’s critical to verify that only authorized users can perform sensitive actions. Security teams should ensure strong authentication (e.g., OAuth, API keys) and scoped permissions are in place so the model cannot execute privileged actions on behalf of unauthorized users.

- Prompt Injection and API Misuse – LLMs interpret natural language and decide which API to call based on user input. Attackers may try to manipulate prompts to trigger unintended or harmful behavior. Security teams should test for prompt injection vulnerabilities, ensuring the model cannot be tricked into calling the wrong API or bypassing safety checks.

- Lack of Input Validation – If user inputs passed to APIs are not properly validated, they can lead to errors, data leaks, or even injection attacks. Security teams should confirm that middleware and API layers validate and sanitize all input, regardless of whether it came from a user or the LLM.

- Over-Exposed Functionality – APIs may expose more functionality than the LLM needs, increasing the risk of misuse. Security teams should enforce the principle of least privilege, giving the model access only to the endpoints it truly needs.

- Relevant OWASP LLM Risks – Many risks in this phase, such as prompt injection and excessive agency, map directly to the OWASP Top 10 for Large Language Model Applications. Security experts should use that list as a guide for testing.
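Several of these controls (authorization, input validation, and least privilege) can be enforced in a gate that sits between the model and the APIs and checks every tool call the LLM proposes. The sketch below assumes a hypothetical tool registry and role model; the key design choice is that the gate takes the user's identity from the authenticated session, never from model output, so a prompt-injected request cannot act on another user's behalf.

```python
import re
from dataclasses import dataclass

ORDER_ID = re.compile(r"ORD-\d{6}")
USERNAME = re.compile(r"[a-z0-9_]{3,32}")

# Hypothetical tool registry: the role required for each tool and a validator
# for every argument it accepts (anything else is rejected).
TOOLS = {
    "get_order_status": {"role": "customer", "args": {"order_id": ORDER_ID}},
    "reset_password": {"role": "customer", "args": {"username": USERNAME}},
    "refund_order": {"role": "support_agent", "args": {"order_id": ORDER_ID}},
}


@dataclass
class Session:
    """Identity established by the application's authentication layer."""
    user: str
    roles: set[str]


class ToolCallRejected(Exception):
    pass


def authorize_tool_call(session: Session, tool: str, args: dict) -> None:
    """Raise ToolCallRejected unless the LLM-proposed call is allowed for this session."""
    spec = TOOLS.get(tool)
    if spec is None:
        raise ToolCallRejected(f"unknown tool: {tool}")
    if spec["role"] not in session.roles:
        raise ToolCallRejected(f"{session.user} lacks role {spec['role']} for {tool}")
    if set(args) != set(spec["args"]):
        raise ToolCallRejected(f"unexpected arguments for {tool}: {sorted(args)}")
    for name, pattern in spec["args"].items():
        value = args[name]
        if not isinstance(value, str) or not pattern.fullmatch(value):
            raise ToolCallRejected(f"invalid value for {name}")
    # User-scoped actions are bound to the session identity, not to model output.
    if tool == "reset_password" and args["username"] != session.user:
        raise ToolCallRejected("cannot reset another user's password")


session = Session(user="alice", roles={"customer"})
authorize_tool_call(session, "get_order_status", {"order_id": "ORD-123456"})  # allowed
for tool, args in [
    ("refund_order", {"order_id": "ORD-123456"}),
    ("reset_password", {"username": "bob"}),
]:
    try:
        authorize_tool_call(session, tool, args)
    except ToolCallRejected as err:
        print("rejected:", err)
# rejected: alice lacks role support_agent for refund_order
# rejected: cannot reset another user's password
```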

Conclusion

Securing an LLM is a complex and multifaceted challenge that requires a comprehensive, stage-by-stage approach. By partnering with an experienced security team, companies can navigate the unique security considerations of LLM development and deployment, ensuring their models are robust, reliable, and compliant with relevant regulations and industry standards. By prioritizing security from the outset, organizations can unlock the full potential of LLMs while safeguarding their data, their systems, and their reputation.
